If you are reading this blog post via a 3rd party source it is very likely that many parts of it will not render correctly (usually, the interactive graphs). Please view the post on dogesec.com for the full interactive viewing experience.

tl;dr

I pit OpenAI, Anthropic and Google against each other to see which one best understands MITRE ATT&CK.

Some background…

A while ago we built txt2stix, a tool that automatically extracts intelligence from intel reports.

In the first versions of txt2stix we used only pattern-based extractions.

These were (and still are) perfect for extracting IOCs that always follow a certain pattern, like IP addresses or URLs.

However, they were problematic for TTPs, given how descriptively TTPs tend to be written about.

Our workaround in txt2stix was to use a lookup. It worked like this: 1) lookups contained the TTP value, e.g. Defense Evasion; 2) when the lookup extraction was triggered, the text of the report would be scanned for the string…
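Here is a minimal sketch of the lookup idea. It makes three assumptions:

- It uses a tiny hypothetical name-to-ID table. The real `lookup_mitre_attack_enterprise_name` list covers all of ATT&CK Enterprise.
- It uses case-sensitive, whole-word matching.
- txt2stix's actual matching rules may differ.

```python
import re

# Hypothetical lookup table (ATT&CK name -> ATT&CK ID), for illustration only.
ATTACK_LOOKUP = {
    "Windows Management Instrumentation": "T1047",
    "REvil": "S0496",
    "Execution": "TA0002",
    "Defense Evasion": "TA0005",
}


def lookup_extract(text: str, lookup: dict[str, str]) -> list[tuple[str, str]]:
    """Return (name, id) pairs for lookup values that appear verbatim in text."""
    found = []
    for name, attack_id in lookup.items():
        # re.escape treats any special characters in a name literally;
        # \b word boundaries stop "Execution" matching inside "Executions".
        if re.search(rf"\b{re.escape(name)}\b", text):
            found.append((name, attack_id))
    return found


print(lookup_extract("REvil abuses Windows Management Instrumentation for Defense Evasion.", ATTACK_LOOKUP))
```

Consider a less tidy sentence such as "REvil uses WMI to execute malicious commands". Here only REvil is found: "WMI" is an abbreviation and "execute" is not the tactic name "Execution", so neither is an exact lookup string.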

Here's a good example using a completely fictitious report that references the MITRE ATT&CK Technique Windows Management Instrumentation (T1047) and the Malware REvil (S0496). I use the lookup extraction lookup_mitre_attack_enterprise_name to identify them.

You can also follow along with my examples by installing txt2stix and using the commands shown in this post.

```bash
python3 txt2stix.py \
  --relationship_mode ai \
  --ai_settings_relationships openai:gpt-4o \
  --input_file tests/data/manually_generated_reports/mitre_attack_enterprise_lookup_demo.txt \
  --name 'DOGESEC blog simple extraction demo' \
  --tlp_level clear \
  --confidence 100 \
  --report_id 798586cf-85d4-5342-8990-5c5d7089a763 \
  --use_extractions pattern_ipv4_address_only,pattern_domain_name_only,pattern_autonomous_system_number,pattern_file_hash_md5,lookup_mitre_attack_enterprise_name
```

Of course, these extractions are very useful. In reality, however, most reports do not describe TTPs this cleanly. The same txt2stix lookup extraction would not work if the input text did not contain a string that exactly matched an ATT&CK ID or name.

AI in txt2stix

As LLMs became mainstream, txt2stix grew to support them. For some time now it has been able to use LLMs both for extraction and for generating relationships between extractions.

Above, I showed the use of AI relationship generation in txt2stix. To generate relationships, txt2stix passes the extractions (as a structured JSON document) along with the input text to the specified model. It asks the model to identify the relationships between the extractions and to describe each one, using the default STIX relationship_types where possible. The model returns its analysis as a structured JSON document, which txt2stix uses to create the relationships.
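The receiving side of that exchange might look like the following minimal sketch. Several details are assumptions for illustration, not txt2stix's actual format:

- The response schema is hypothetical: a `relationships` array whose items have `source_ref`, `target_ref`, `relationship_type` and `description`.
- The `extraction_N` refs are invented.
- The fall-back to `related-to` is an assumption.

```python
import json

# A subset of relationship types defined in STIX 2.1 (not exhaustive).
STIX_RELATIONSHIP_TYPES = {
    "uses", "targets", "indicates", "mitigates", "attributed-to",
    "related-to", "derived-from", "duplicate-of",
}


def parse_relationships(raw: str, known_refs: set[str]) -> list[dict]:
    """Parse a (hypothetical) model response into relationship dicts.

    Relationships that point at refs the model was never given are dropped,
    and unrecognised relationship types fall back to "related-to".
    """
    data = json.loads(raw)
    parsed = []
    for rel in data.get("relationships", []):
        src, tgt = rel.get("source_ref"), rel.get("target_ref")
        if src not in known_refs or tgt not in known_refs:
            continue  # guard against relationships to invented extractions
        rel_type = rel.get("relationship_type", "related-to")
        if rel_type not in STIX_RELATIONSHIP_TYPES:
            rel_type = "related-to"
        parsed.append({
            "source_ref": src,
            "target_ref": tgt,
            "relationship_type": rel_type,
            "description": rel.get("description", ""),
        })
    return parsed
```

Validating against the refs that were actually sent is a cheap way to stop a model from relating extractions that do not exist.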

AI extractions are also fairly simple in their construction. You can see all the AI extractions that ship with txt2stix here.

Here's the MITRE ATT&CK Enterprise extraction config we'll use for this test:

```yaml
ai_mitre_attack_enterprise:
  type: ai
  name: 'MITRE ATT&CK Enterprise'
  description: ''
  notes: ''
  created: 2020-01-01
  modified: 2020-01-01
  created_by: DOGESEC
  version: 1.0.0
  prompt_base: 'Extract all references to MITRE ATT&CK Enterprise tactics, techniques, groups, data sources, mitigations, software, and campaigns described in the text. These references may not be explicit in the text so you should be careful to account for the natural language of the text your analysis. Do not include MITRE ATT&CK ICS or MITRE ATT&CK Mobile in the results.'
  prompt_conversion: 'Convert all extractions into the corresponding ATT&CK ID.'
  test_cases: generic_mitre_attack_enterprise
  ignore_extractions:
    -
  stix_mapping: ctibutler-mitre-attack-enterprise-id
```

The prompt_base forms the key part of the prompt sent to the LLM, along with the input document. prompt_conversion is used as a secondary prompt to convert the extractions into a single structure (an ATT&CK ID) so that the corresponding ATT&CK Object can be identified in CTI Butler.
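Because prompt_conversion normalises every extraction to an ATT&CK ID, a cheap sanity check before the CTI Butler lookup is to confirm that the model returned a well-formed ID. The sketch below does this and reads the object type from the ID prefix (for example TA for tactics, S for software). It is an illustrative check, not code taken from txt2stix.

```python
import re
from typing import Optional

# ATT&CK Enterprise ID formats and the object type each prefix denotes.
ATTACK_ID_PATTERNS = [
    (re.compile(r"TA\d{4}"), "tactic"),
    (re.compile(r"T\d{4}\.\d{3}"), "sub-technique"),
    (re.compile(r"T\d{4}"), "technique"),
    (re.compile(r"DS\d{4}"), "data source"),
    (re.compile(r"G\d{4}"), "group"),
    (re.compile(r"S\d{4}"), "software"),
    (re.compile(r"M\d{4}"), "mitigation"),
    (re.compile(r"C\d{4}"), "campaign"),
]


def classify_attack_id(attack_id: str) -> Optional[str]:
    """Return the ATT&CK object type for a well-formed ID, else None."""
    candidate = attack_id.strip().upper()
    for pattern, kind in ATTACK_ID_PATTERNS:
        # fullmatch rejects partial IDs such as "T104" or trailing junk.
        if pattern.fullmatch(candidate):
            return kind
    return None


print(classify_attack_id("T1574.007"))
```

Anything that returns None here, such as a technique name the model failed to convert, can be rejected before a lookup is attempted.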

txt2stix also passes additional information in the prompts during processing, as shown here.

For the response, the LLM is asked to produce a structured JSON document for each extraction. txt2stix then uses this document to model the data as STIX, applying the stix_mapping defined in the extraction's config.

Comparing models

I recently added support for Anthropic and Google models to txt2stix, alongside OpenAI and our custom-built models. So I decided it would be a nice exercise to see how each performed when asked to extract TTPs from intel reports.

I have purposely chosen MITRE ATT&CK mapping for TTP extraction because it is a well-documented and widely used framework. It is also one that AI models trained on internet data are likely to have come across.

In this test I'll use the latest flagship models from each provider at the time of writing:

- openai:gpt-4o

- anthropic:claude-3-5-sonnet-latest

- gemini:models/gemini-1.5-pro-latest

I've also included our private model, dogesec:muchsec-0.4, and compared the results with the BERTClassifierModel used in MITRE's TRAM.

I'll use a very simple sentence to see how they perform for ATT&CK extraction:

REvil uses WMI to execute malicious commands to reference a retrieved PE file through a path modification.

As an analyst, I'd classify this sentence with the following ATT&CK objects:

- Windows Management Instrumentation (T1047)

- REvil (S0496)

- Execution (TA0002)

Let's see what the AIs identify:

openai:gpt-4o

```bash
python3 txt2stix.py \
  --relationship_mode ai \
  --ai_settings_relationships openai:gpt-4o \
  --input_file tests/data/manually_generated_reports/mitre_attack_enterprise_ai_demo.txt \
  --name 'DOGESEC blog openai:gpt-4o simple ATT&CK extraction' \
  --tlp_level clear \
  --confidence 100 \
  --use_extractions ai_mitre_attack_enterprise \
  --report_id 558f8fda-9727-48c3-b8d2-717874ed49ff \
  --ai_settings_extractions openai:gpt-4o
```

- ✅ Windows Management Instrumentation (T1047)

- ✅ REvil (S0496)

- ❌ Execution (TA0002)

anthropic:claude-3-5-sonnet-latest

```bash
python3 txt2stix.py \
  --relationship_mode ai \
  --ai_settings_relationships anthropic:claude-3-5-sonnet-latest \
  --input_file tests/data/manually_generated_reports/mitre_attack_enterprise_ai_demo.txt \
  --name 'DOGESEC blog anthropic:claude-3-5-sonnet-latest simple ATT&CK extraction' \
  --tlp_level clear \
  --confidence 100 \
  --use_extractions ai_mitre_attack_enterprise \
  --report_id 9fedfa49-dd59-4f12-9b3e-96e664751d46 \
  --ai_settings_extractions anthropic:claude-3-5-sonnet-latest
```

- ✅ Windows Management Instrumentation (T1047)

- ❌ REvil (S0496)

- ❌ Execution (TA0002)

- ➕ Path Interception by PATH Environment Variable (T1574.007)

gemini:models/gemini-1.5-pro-latest

```bash
python3 txt2stix.py \
  --relationship_mode ai \
  --ai_settings_relationships gemini:models/gemini-1.5-pro-latest \
  --input_file tests/data/manually_generated_reports/mitre_attack_enterprise_ai_demo.txt \
  --name 'DOGESEC blog gemini:models/gemini-1.5-pro-latest extraction simple ATT&CK extraction' \
  --tlp_level clear \
  --confidence 100 \
  --use_extractions ai_mitre_attack_enterprise \
  --report_id 4d7705b6-650d-45c7-9844-e74f66c3d9a6 \
  --ai_settings_extractions gemini:models/gemini-1.5-pro-latest
```

- ✅ Windows Management Instrumentation (T1047)

- ❌ REvil (S0496)

- ❌ Execution (TA0002)

- ➕ PowerShell (T1059.001)

- ➕ PowerShell Profile (T1546.013)

dogesec:muchsec-0.4

```bash
python3 txt2stix.py \
  --relationship_mode ai \
  --ai_settings_relationships dogesec:muchsec-0.4 \
  --input_file tests/data/manually_generated_reports/mitre_attack_enterprise_ai_demo.txt \
  --name 'DOGESEC blog dogesec:muchsec-0.4 simple ATT&CK extraction' \
  --tlp_level clear \
  --confidence 100 \
  --use_extractions ai_mitre_attack_enterprise \
  --report_id fcb593fc-c7ae-4253-8a2a-fdf2cc198d14 \
  --ai_settings_extractions dogesec:muchsec-0.4
```

Note: you cannot run this model in txt2stix unless you're a DOGESEC customer.

- ✅ Windows Management Instrumentation (T1047)

- ✅ REvil (S0496)

- ✅ Execution (TA0002)

MITRE TRAM

- ✅ Windows Management Instrumentation (T1047)

- ❌ REvil (S0496)

- ❌ Execution (TA0002)

MITRE TRAM is trained on only 50 Techniques (described here), so this outcome was easy to predict.
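The sketch below puts the five result lists above side by side. It scores each model against the three analyst labels using two measures:

- Precision: how many of the returned IDs were correct.
- Recall: how many of the expected IDs were found.

This scoring is an illustration added for comparison, not something txt2stix does. The ID sets are taken directly from the results in this post.

```python
# Analyst labels for the test sentence.
EXPECTED = {"T1047", "S0496", "TA0002"}

# ATT&CK IDs each model returned, as reported above.
RESULTS = {
    "openai:gpt-4o": {"T1047", "S0496"},
    "anthropic:claude-3-5-sonnet-latest": {"T1047", "T1574.007"},
    "gemini:models/gemini-1.5-pro-latest": {"T1047", "T1059.001", "T1546.013"},
    "dogesec:muchsec-0.4": {"T1047", "S0496", "TA0002"},
    "MITRE TRAM": {"T1047"},
}


def score(predicted: set[str], expected: set[str]) -> tuple[float, float]:
    """Return (precision, recall) for one model's extractions."""
    true_positives = len(predicted & expected)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(expected) if expected else 0.0
    return precision, recall


for model, predicted in RESULTS.items():
    p, r = score(predicted, EXPECTED)
    print(f"{model:40} precision={p:.2f} recall={r:.2f}")
```

On these numbers, dogesec:muchsec-0.4 is the only model with a recall of 1.00 and GPT-4o reaches 0.67. Claude, Gemini and TRAM each find one of the three expected objects (0.33). Gemini's two unsupported PowerShell techniques also pull its precision down to 0.33.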

In summary

For a long time, AI models were aware of ATT&CK Techniques but no other ATT&CK objects. OpenAI's GPT-4o now understands more than just Techniques, as it detected REvil (Malware). The other off-the-shelf models, and MITRE TRAM, only detected ATT&CK Techniques.

Anthropic's Claude identified a Sub-Technique, Path Interception by PATH Environment Variable (T1574.007), that is not described in the sentence. Similarly, Google's Gemini assumes PowerShell was used, despite the sentence making no reference to it.

Ultimately, all the off-the-shelf models are still way behind models trained specifically on threat intel for TTP extraction. The gap is so large that I have not even bothered to include results for more complex inputs in this post.

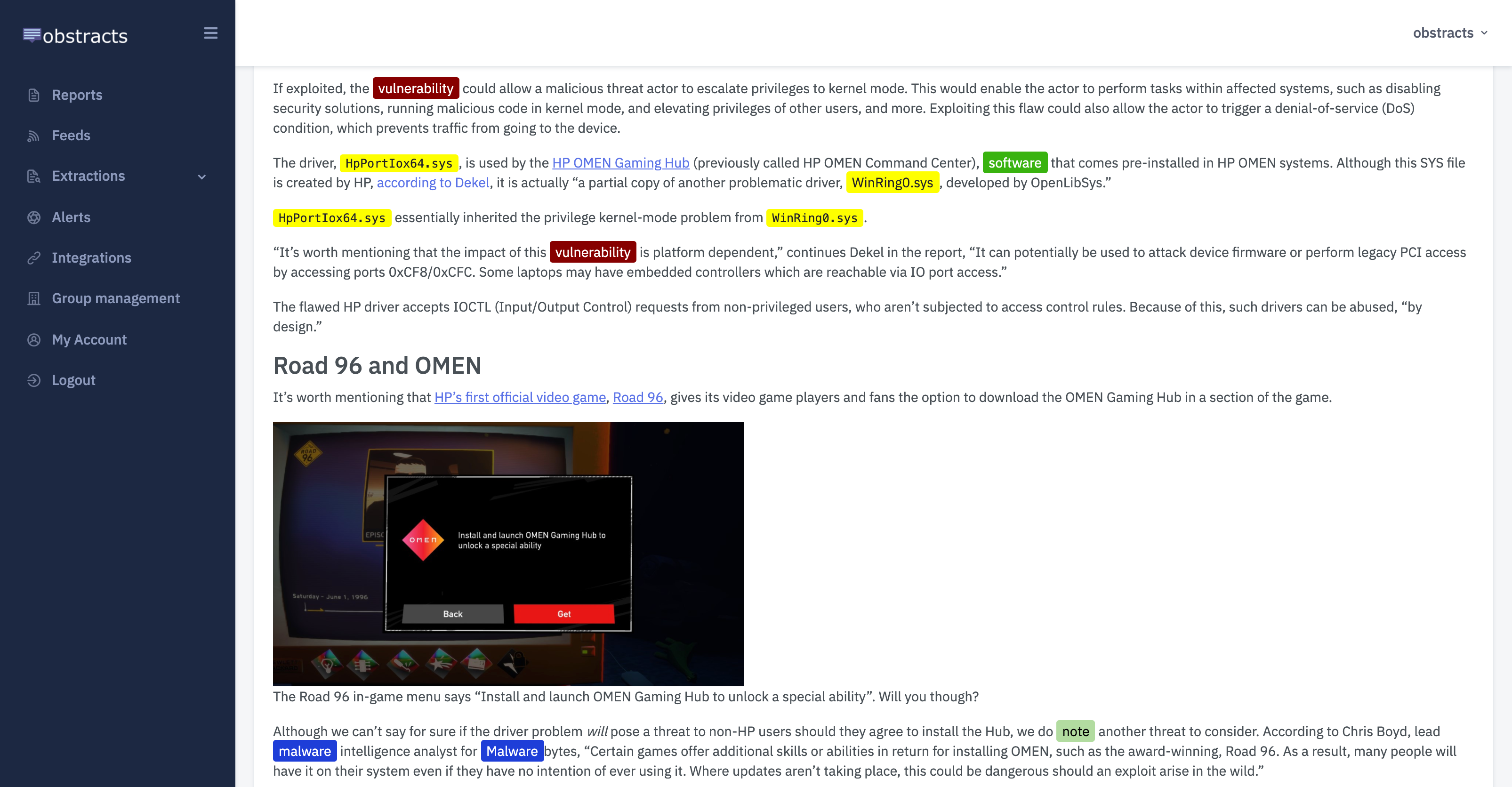

Obstracts

The RSS reader for threat intelligence teams. Turn any blog into machine readable STIX 2.1 data ready for use with your security stack.

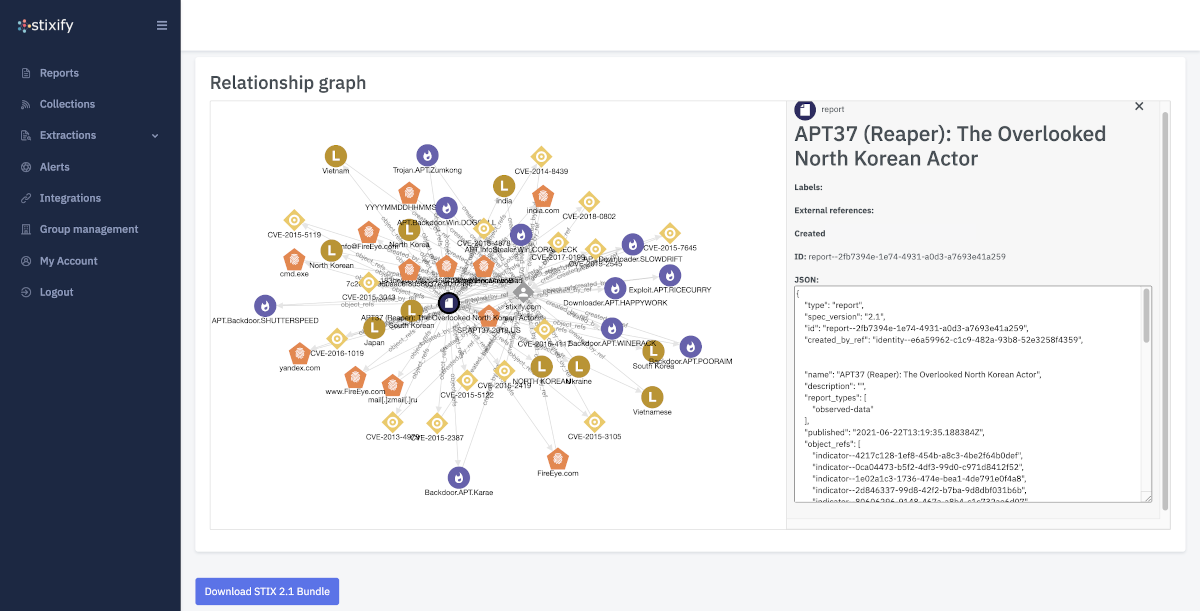

Stixify

Your automated threat intelligence analyst. Extract machine readable STIX 2.1 data ready for use with your security stack.

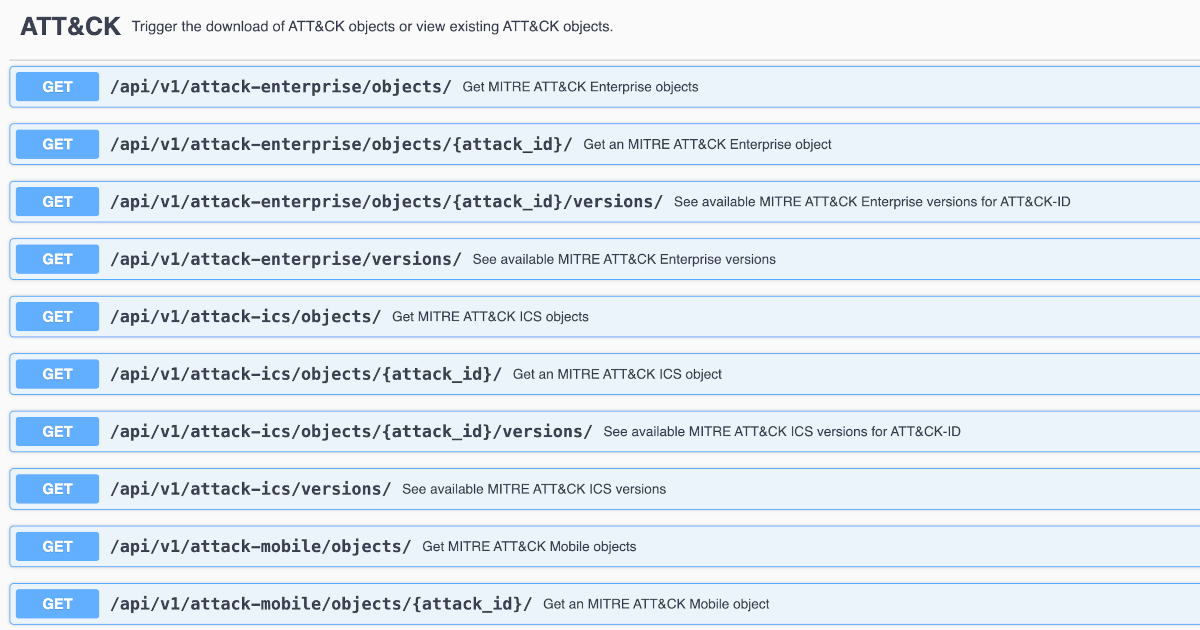

CTI Butler

One API. Much CTI. CTI Butler is the API used by the world's leading cyber-security companies.

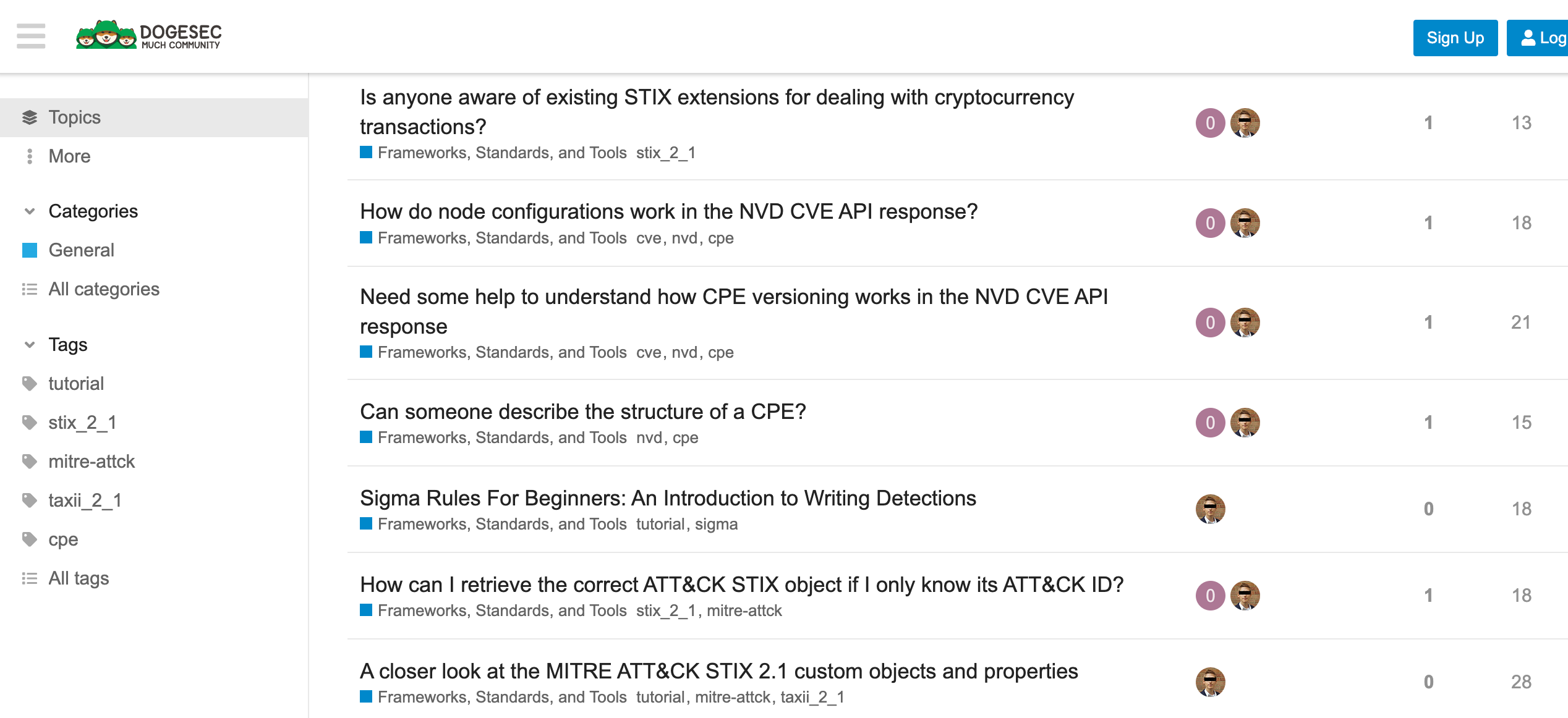

Discuss this post

Head on over to the dogesec community to discuss this post.
