If you are reading this blog post via a 3rd party source it is very likely that many parts of it will not render correctly (usually, the interactive graphs). Please view the post on dogesec.com for the full interactive viewing experience.

tl;dr

A short lesson in why building a product with a single point of failure is bad (duh!), and our hunt for a Wayback Machine alternative.

Overview

ICYMI, the Internet Archive (the organisation behind the Wayback Machine) has been breached.

By all accounts, the attack is ongoing, with DDoS campaigns knocking archive.org and openlibrary.org offline.

Whether this is being conducted by the same actors as the original breach is unknown. The two things I have learned from this attack are: 1) just how much of an intelligence analyst’s work relies on the Internet Archive, and 2) when the Wayback Machine goes down, history4feed breaks!

A short recap

history4feed creates a full historical archive for any blog.

It does this using the Wayback Machine.

This approach is fairly reliable, but not perfect. Wayback Machine archives sometimes miss time periods, or simply don’t index a blog at all. This is especially a problem with newer or niche blogs, though the Wayback Machine has blind spots for highly trafficked sites too.

More critically, when the Wayback Machine goes down, history4feed loses the ability to create the historic backfill for any site.

The knee-jerk reaction

As the bug tickets for history4feed started flowing in, so did the panic.

Our immediate thought was to seek alternatives to the Wayback Machine and add them as backup services for situations like this.

The problem is, there isn’t really anything else like the Wayback Machine.

So we promptly decided to turn to the Internet Archive’s torrents, but quickly remembered how unreliable they are.

> I used to solely depend on Wayback machine to automate archiving pages. Now, I am archiving webpages using selenium python package on https://archive.ph/ and https://ghostarchive.org/.

Whilst a good idea going forward, this was no use to us at the time, because it cannot create retrospective backups.

Then we started exploring the idea of building blog scrapers. AI is getting better and better at this task, and a number of companies offer services designed to do exactly this.

The problem: cost. Many users of history4feed do not have the budget for these services.

Having already failed to follow one mantra, “don’t put all your eggs in one basket”, I didn’t want to fall foul of another: “don’t reinvent the wheel”.

Going down the AI route got us thinking about building some sort of web crawler.

Our focus is cyber-security blogs, so using our list of Awesome Threat Intel Blogs, I set out to identify the root domains with a robots.txt file that pointed to a sitemap file.

Building a basic blog crawler

Most of these sites pointed to a sitemap file.

For example, https://www.crowdstrike.com/robots.txt:

```text
User-agent: *

Sitemap: https://www.crowdstrike.com/sitemap_index.xml
Sitemap: https://www.crowdstrike.com/blog/sitemap_index.xml
Sitemap: https://www.crowdstrike.com/falcon-sitemap.xml
```

As you can see, a robots.txt file can contain one or more Sitemap entries.
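Extracting those entries doesn't take much code. Here is a minimal sketch using Python's standard `urllib.robotparser`, whose `site_maps()` method (Python 3.8+) returns the declared Sitemap URLs, or `None` if there are none. The function names are our own and are not taken from sitemap2posts. The sketch assumes `robots.txt` always sits at the root of the blog's host.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser


def robots_url(blog_url: str) -> str:
    """Return the robots.txt URL for the host that serves blog_url."""
    parts = urlsplit(blog_url)
    # robots.txt is always served from the root of the host, whatever the blog path
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def sitemaps_from_robots(robots_txt: str) -> list[str]:
    """Return every Sitemap URL declared in the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None (not an empty list) when no Sitemap lines exist
    return list(parser.site_maps() or [])
```

To try it against a live site, you could fetch the file first, e.g. `urllib.request.urlopen(robots_url(blog_url)).read().decode()`, and pass the resulting text to `sitemaps_from_robots`.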

I’m new to web crawling and quickly learned that robots.txt files often link to sitemap indexes (a collection of sitemap files).

Going back a step, a sitemap file has the following XML structure:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>http://www.example.com/</loc>
    <lastmod>2024-10-01</lastmod>
    <changefreq>daily</changefreq>
    <priority>1.0</priority>
  </url>
  <url>
    <loc>http://www.example.com/about</loc>
    <lastmod>2024-09-25</lastmod>
    <changefreq>weekly</changefreq>
    <priority>0.8</priority>
  </url>
  <url>
    <loc>http://www.example.com/contact</loc>
    <lastmod>2024-09-20</lastmod>
    <changefreq>monthly</changefreq>
    <priority>0.5</priority>
  </url>
</urlset>
```

Here, each `loc` is a link to a page on the site.
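Pulling the `loc` and `lastmod` values out of a file like this can be sketched with the standard library's `xml.etree.ElementTree`. This is an illustration, not the sitemap2posts implementation. The hypothetical `parse_urlset` function expects the raw bytes of the HTTP response. Because every lookup is namespace-qualified, elements from other namespaces (such as the `image:image` tags some sitemaps include) are simply ignored.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol
SM_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_urlset(xml_bytes: bytes) -> list[dict]:
    """Extract loc and (optional) lastmod from each <url> element of a sitemap."""
    root = ET.fromstring(xml_bytes)
    entries = []
    for url in root.findall("sm:url", SM_NS):
        loc = url.findtext("sm:loc", default="", namespaces=SM_NS).strip()
        lastmod = url.findtext("sm:lastmod", namespaces=SM_NS)
        if loc:  # skip malformed entries without a location
            entries.append({"url": loc, "lastmod": lastmod.strip() if lastmod else None})
    return entries
```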

For sites with a large number of pages, separate sitemaps often exist for different parts of the site (e.g. blog, marketing pages, etc.). These are managed by sitemap indexes. A sitemap index looks like this:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap>
    <loc>http://example.com/sitemap1.xml</loc>
    <lastmod>2024-10-14</lastmod>
  </sitemap>
  <sitemap>
    <loc>http://example.com/sitemap2.xml</loc>
    <lastmod>2024-10-13</lastmod>
  </sitemap>
  <sitemap>
    <loc>http://example.com/sitemap3.xml</loc>
    <lastmod>2024-10-12</lastmod>
  </sitemap>
</sitemapindex>
```

Here, each `loc` is the location of an individual sitemap file.
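Because an index can point at further sitemaps, a crawler needs to tell the two file types apart by their root element and recurse through indexes. The sketch below is minimal. It assumes a caller-supplied `fetch(url) -> bytes` function, which is hypothetical and exists so the logic can be tested without network access. It also records which sitemap each URL came from.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Optional

# Namespace used by both <urlset> and <sitemapindex> documents
SM = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def collect_urls(
    sitemap_url: str,
    fetch: Callable[[str], bytes],
    seen: Optional[set] = None,
) -> list:
    """Walk a sitemap or sitemap index and return page URLs with their source sitemap."""
    seen = set() if seen is None else seen
    if sitemap_url in seen:  # avoid loops between indexes that reference each other
        return []
    seen.add(sitemap_url)

    root = ET.fromstring(fetch(sitemap_url))
    results = []
    if root.tag == SM + "sitemapindex":
        # An index only lists other sitemaps, so recurse into each one
        for child in root.findall(SM + "sitemap"):
            loc = (child.findtext(SM + "loc") or "").strip()
            if loc:
                results.extend(collect_urls(loc, fetch, seen))
    elif root.tag == SM + "urlset":
        # A regular sitemap lists the pages themselves
        for url in root.findall(SM + "url"):
            loc = (url.findtext(SM + "loc") or "").strip()
            if loc:
                lastmod = url.findtext(SM + "lastmod")
                results.append({
                    "url": loc,
                    "lastmod": lastmod.strip() if lastmod else None,
                    "sitemap": sitemap_url,
                })
    return results
```

In practice, `fetch` could be as simple as `lambda u: urllib.request.urlopen(u).read()`. The protocol also allows gzip-compressed sitemaps, which this sketch does not handle.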

You can see a real example of a sitemap index by going back to CrowdStrike: https://www.crowdstrike.com/sitemap_index.xml. Here is a short snippet of it:

```xml
<?xml version="1.0" encoding="UTF-8"?><?xml-stylesheet type="text/xsl" href="https://www.crowdstrike.com/wp-content/plugins/wordpress-seo/css/main-sitemap.xsl"?>
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap>
    <loc>https://www.crowdstrike.com/post-sitemap.xml</loc>
    <lastmod>2024-10-10T17:21:20+00:00</lastmod>
  </sitemap>
  <sitemap>
    <loc>https://www.crowdstrike.com/post-sitemap2.xml</loc>
    <lastmod>2024-10-10T17:21:20+00:00</lastmod>
  </sitemap>
  <sitemap>
    <loc>https://www.crowdstrike.com/page-sitemap.xml</loc>
    <lastmod>2024-10-10T18:51:15+00:00</lastmod>
  </sitemap>
  <sitemap>
    <loc>https://www.crowdstrike.com/adversary-universe-sitemap.xml</loc>
    <lastmod>2024-09-27T13:57:52+00:00</lastmod>
  </sitemap>
  <sitemap>
    <loc>https://www.crowdstrike.com/epp101-sitemap.xml</loc>
    <lastmod>2024-10-03T18:43:30+00:00</lastmod>
  </sitemap>
  <sitemap>
    <loc>https://www.crowdstrike.com/es-free-trial-guide-sitemap.xml</loc>
    <lastmod>2021-09-17T12:18:58+00:00</lastmod>
  </sitemap>
```

If you examine the CrowdStrike sitemap index, you’ll see it points to a range of sitemaps, not all of which contain blog posts.

Simply gathering every URL in these sitemaps would work for archiving the entire site; however, history4feed is only interested in blog posts.

In the CrowdStrike example, these are all the URLs starting with https://www.crowdstrike.com/blog/, many of which you can see in https://www.crowdstrike.com/post-sitemap.xml:

```xml
<url>
  <loc>https://www.crowdstrike.com/blog/how-to-defend-employees-data-as-social-engineering-evolves/</loc>
  <lastmod>2024-03-22T14:25:47+00:00</lastmod>
  <image:image>
    <image:loc>https://www.crowdstrike.com/wp-content/uploads/2021/06/Blog_0520_08-1.jpeg</image:loc>
  </image:image>
</url>
<url>
  <loc>https://www.crowdstrike.com/blog/new-emphasis-on-an-old-problem-patch-management-and-accountability/</loc>
  <lastmod>2024-03-22T15:01:01+00:00</lastmod>
  <image:image>
    <image:loc>https://www.crowdstrike.com/wp-content/uploads/2020/03/patch-blog-1.jpg</image:loc>
  </image:image>
</url>
<url>
  <loc>https://www.crowdstrike.com/blog/crowdstrike-and-ey-join-forces/</loc>
  <lastmod>2024-03-22T15:07:57+00:00</lastmod>
  <image:image>
    <image:loc>https://www.crowdstrike.com/wp-content/uploads/2021/05/CS_EY_Blog_1060x698_v2-1.jpeg</image:loc>
  </image:image>
</url>
```

An additional step is therefore needed to filter out URLs that do not start with a particular string (in this case, https://www.crowdstrike.com/blog/).
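The filter itself is close to a one-liner. Here is a sketch, assuming each entry is a dict with a `url` key. That representation is our own and not necessarily the one sitemap2posts uses.

```python
def filter_by_prefix(entries: list[dict], prefix: str) -> list[dict]:
    """Keep only the entries whose URL starts with the blog's base URL."""
    # A plain string prefix match: "https://www.crowdstrike.com/blog/" keeps
    # ".../blog/some-post/" but drops pages elsewhere on the domain
    return [entry for entry in entries if entry["url"].startswith(prefix)]
```

Being a literal string match, it is case- and scheme-sensitive, so an `http://` variant of a blog URL would not match an `https://` prefix.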

However, a list of URLs alone was not enough. One of the big benefits of using RSS or Atom feeds directly is that the metadata for the blog and each post (including post title, publish date, and author) is captured in the feed itself.

The sitemap approach gives us a list of URLs and their `lastmod` times, but nothing more.

This is where we had to make a decision:

- the fully featured approach: build out an AI pipeline that would read the blog page and try to identify the title, post date, author, etc.

- the simplistic approach: use the HTML `<title>` tag as the post title, and the `lastmod` value as the post publish time.

Both approaches are problematic.

For option 2, HTML title tags usually do contain the post title, but often include other branding too (like the site name). `lastmod` times are also often wrong as publish dates, because posts are frequently updated after they are published. Even worse, some sites bulk-update this time whenever anything on the site changes.

Option 1 would likely be more accurate, but still risks errors (hallucinations, for example).

Ultimately, we decided to try the simplistic approach, deeming the AI approach overkill for history4feed because, as it stands, the main requirement is to get the actual post content. There is also the issue of cost.

The comforting thing is that we can always revisit this decision in the future should we need to change tack.
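For reference, grabbing the `<title>` text needs nothing beyond the standard library's `html.parser`. Here is a minimal sketch; the `html_title` helper is hypothetical. It returns the first title with whitespace collapsed. It deliberately leaves any site branding in place, which is the limitation of option 2 discussed above.

```python
from html.parser import HTMLParser
from typing import Optional


class _TitleParser(HTMLParser):
    """Collects the text inside the first <title> element of a page."""

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True by default, so &amp; becomes &
        self._in_title = False
        self._done = False
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "title" and not self._done:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self._done = True  # ignore any later <title> elements

    def handle_data(self, data):
        if self._in_title:
            self.parts.append(data)


def html_title(html: str) -> Optional[str]:
    """Return the page's <title> text, or None if there isn't one."""
    parser = _TitleParser()
    parser.feed(html)
    parser.close()
    title = " ".join("".join(parser.parts).split())  # collapse runs of whitespace
    return title or None
```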

A proof of concept

To test this idea out we built out a POC, sitemap2posts.

It is a fairly simple piece of code:

- A user enters the base URL of a blog, e.g. https://www.crowdstrike.com/blog/ (and any other filters they want to apply)

- A list of URLs and last modified times is created from the sitemaps listed in the domain’s `robots.txt` file

- All the URLs that do not start with the URL entered by the user (or that are excluded by the filters applied in the first step) are filtered out

- A JSON file of all posts is produced

Let me show you with an example you can follow along with.

Once you’ve installed sitemap2posts (described here), you can run it like so:

```bash
python3 sitemap2posts.py https://www.crowdstrike.com/blog/ \
  --lastmod_min 2024-01-01 \
  --ignore_sitemaps https://www.crowdstrike.com/page-sitemap.xml,https://www.crowdstrike.com/epp101-sitemap.xml,https://www.crowdstrike.com/author-sitemap.xml \
  --ignore_domain_paths https://www.crowdstrike.com/blog/author,https://www.crowdstrike.com/blog/videos/ \
  --remove_404_records \
  --output crowdstrike_blog.json
```

The script will then output a file with the following structure, containing an entry for each post that matches the criteria set:

```json
{
  "url": "https://www.crowdstrike.com/en-us/blog/malicious-inauthentic-falcon-crash-reporter-installer-spearphishing/",
  "lastmod": "2024-09-21",
  "title": "Malicious Inauthentic Falcon Crash Reporter Installer Distributed to German Entity",
  "sitemap": "https://www.crowdstrike.com/post-sitemap2.xml"
},
{
  "url": "https://www.crowdstrike.com/en-us/blog/malicious-inauthentic-falcon-crash-reporter-installer-ciro-malware/",
  "lastmod": "2024-09-21",
  "title": "Malicious Inauthentic Falcon Crash Reporter Installer Delivers Malware Named Ciro",
  "sitemap": "https://www.crowdstrike.com/post-sitemap2.xml"
},
{
  "url": "https://www.crowdstrike.com/en-us/blog/making-threat-graph-extensible-part-2/",
  "lastmod": "2024-09-05",
  "title": "How Threat Graph Leverages DSL to Improve Data Ingestion, Part 2",
  "sitemap": "https://www.crowdstrike.com/post-sitemap2.xml"
}
```
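For readers curious how the filtering flags in that command might behave, here is a hypothetical `apply_filters` function. It is our assumption about their semantics, not the sitemap2posts source. It assumes `--lastmod_min` drops entries last modified before the given date, `--ignore_sitemaps` drops entries found in the listed sitemap files, and `--ignore_domain_paths` drops URLs under the listed prefixes.

```python
from datetime import date
from typing import Iterable, Optional


def apply_filters(
    entries: Iterable[dict],
    lastmod_min: Optional[date] = None,
    ignore_sitemaps: Iterable[str] = (),
    ignore_domain_paths: Iterable[str] = (),
) -> list:
    """Hypothetical equivalent of --lastmod_min, --ignore_sitemaps and --ignore_domain_paths."""
    ignored_maps = set(ignore_sitemaps)
    ignored_paths = tuple(ignore_domain_paths)
    kept = []
    for entry in entries:
        # Drop everything that came from an ignored sitemap file
        if entry.get("sitemap") in ignored_maps:
            continue
        # Drop URLs under an ignored path (str.startswith accepts a tuple of prefixes)
        if ignored_paths and entry["url"].startswith(ignored_paths):
            continue
        if lastmod_min is not None:
            lastmod = entry.get("lastmod")
            # W3C datetimes begin with YYYY-MM-DD, so the first 10 characters give the date
            if not lastmod or date.fromisoformat(lastmod[:10]) < lastmod_min:
                continue
        kept.append(entry)
    return kept
```

Entries with no `lastmod` are dropped whenever a minimum date is set. That is a design choice of this sketch, not a documented behaviour of the tool.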

Backfilling posts in history4feed

The next step in the process was to allow users to take the crawled posts and add them to history4feed.

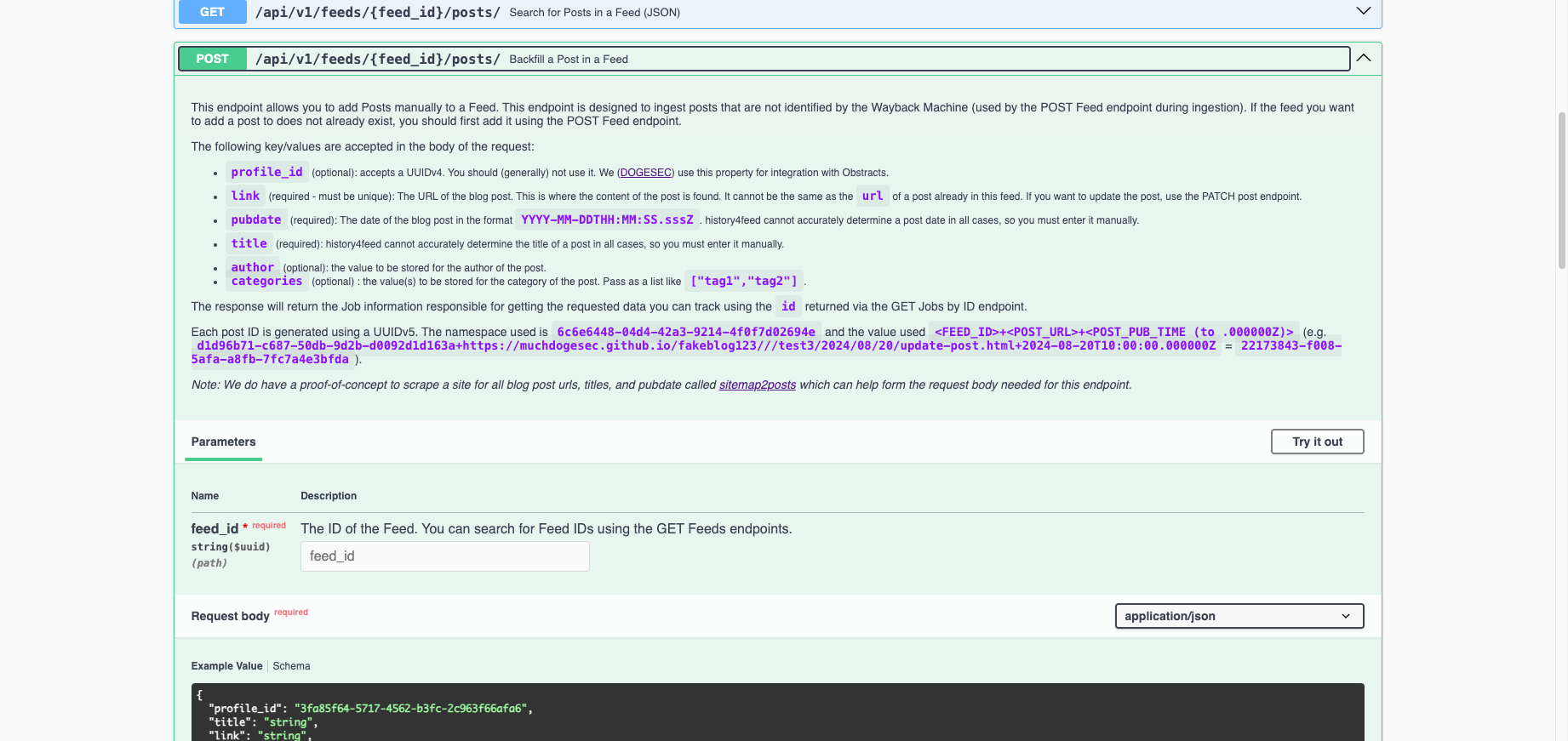

To do this, we added a new endpoint where users can POST a Post to an existing feed.

For example, to add the first post from the JSON output above:

```bash
curl -X 'POST' \
  'http://localhost:8002/api/v1/feeds/d1d96b71-c687-50db-9d2b-d0092d1d163a/posts/' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{
  "title": "Malicious Inauthentic Falcon Crash Reporter Installer Distributed to German Entity",
  "link": "https://www.crowdstrike.com/en-us/blog/malicious-inauthentic-falcon-crash-reporter-installer-spearphishing/",
  "pubdate": "2024-09-21T00:00:00.000Z"
}'
```

This request will then trigger a job for history4feed to visit the link specified and retrieve the full text of the article.
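If you'd rather script the backfill than run curl once per post, the same request can be built in Python. This is a minimal sketch with a few assumptions. Each sitemap2posts entry maps onto the POST body as in the curl example: `title` to `title`, `url` to `link`, and `lastmod` to `pubdate`. Date-only `lastmod` values are treated as midnight UTC. Both `build_add_post_request` and `lastmod_to_pubdate` are hypothetical helpers, not part of history4feed.

```python
import json
import urllib.request
from datetime import datetime, timezone


def lastmod_to_pubdate(lastmod: str) -> str:
    """Convert a sitemap lastmod (date or W3C datetime) to a UTC ISO 8601 string."""
    if lastmod.endswith("Z"):
        lastmod = lastmod[:-1] + "+00:00"  # fromisoformat() only accepts "Z" from Python 3.11
    dt = datetime.fromisoformat(lastmod)
    if dt.tzinfo is None:
        dt = dt.replace(tzinfo=timezone.utc)  # assumption: date-only values mean UTC midnight
    dt = dt.astimezone(timezone.utc)
    return dt.strftime("%Y-%m-%dT%H:%M:%S.") + f"{dt.microsecond // 1000:03d}Z"


def build_add_post_request(base_url: str, feed_id: str, entry: dict) -> urllib.request.Request:
    """Build (but do not send) a POST equivalent to the curl example from one entry."""
    payload = {
        "title": entry["title"],
        "link": entry["url"],
        "pubdate": lastmod_to_pubdate(entry["lastmod"]),
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/v1/feeds/{feed_id}/posts/",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Accept": "application/json", "Content-Type": "application/json"},
        method="POST",
    )
```

Sending it is then a matter of `urllib.request.urlopen(req)`. Assuming the output file is a JSON array of entries, you could loop over `json.load(open("crowdstrike_blog.json"))` and submit each one.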

Once that job completes, you can get the full post content using its ID:

```bash
curl -X 'GET' \
  'http://localhost:8002/api/v1/feeds/d1d96b71-c687-50db-9d2b-d0092d1d163a/posts/9841d81f-0cbc-5f10-988f-fe9b298ebb1e/' \
  -H 'accept: application/json'
```

```json
{
  "id": "9841d81f-0cbc-5f10-988f-fe9b298ebb1e",
  "profile_id": null,
  "datetime_added": "2024-10-24T06:25:13.074778Z",
  "datetime_updated": "2024-10-24T06:25:18.408575Z",
  "title": "Malicious Inauthentic Falcon Crash Reporter Installer Distributed to German Entity",
  "description": "<html><body><div><span><h2>Summary</h2>\n<p>On July 24, 2024, CrowdStrike Intelligence identified an unattributed spearphishing attempt delivering an inauthentic CrowdStrike Crash Reporter installer via a website impersonating a German entity. The website was registered with a sub-domain registrar. Website artifacts indicate the domain was likely created on July 20, 2024, one day after an issue present in a single content update for CrowdStrike’s Falcon sensor — which impacted Windows operating systems — was identified and a fix was deployed.</p>\n<p>After the user clicks the Download button, the website leverages JavaScript (JS) that masquerades as JQuery v3.7.1 to download and deobfuscate the installer. The installer...",
  "link": "https://www.crowdstrike.com/en-us/blog/malicious-inauthentic-falcon-crash-reporter-installer-spearphishing/",
  "pubdate": "2024-09-21T00:00:00Z",
  "author": null,
  "is_full_text": true,
  "content_type": "text/html;charset=utf-8",
  "added_manually": true,
  "categories": []
}
```

(I’ve removed some of the content in the description for brevity here.)
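Since the full text is fetched by a background job, a script may want to wait for it before reading the post. Here is a sketch of a simple poller. It assumes the post's `is_full_text` field turns `true` once retrieval succeeds; we haven't confirmed that against history4feed's job handling. `get_post` is a hypothetical caller-supplied function that performs the GET shown above and returns the parsed JSON, or `None` if the post isn't available yet.

```python
import time
from typing import Callable, Optional


def wait_for_full_text(
    get_post: Callable[[], Optional[dict]],
    timeout_s: float = 60.0,
    interval_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Poll until the post reports is_full_text, or raise TimeoutError."""
    waited = 0.0
    while True:
        post = get_post()  # e.g. a GET on .../posts/<post_id>/ returning parsed JSON
        if post and post.get("is_full_text"):
            return post
        if waited >= timeout_s:
            raise TimeoutError("post content was not retrieved in time")
        sleep(interval_s)  # injectable so tests don't actually wait
        waited += interval_s
```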

This approach solves two of our problems:

- if a Wayback Machine archive is unavailable, and

- if a Wayback Machine archive is available but is missing certain posts

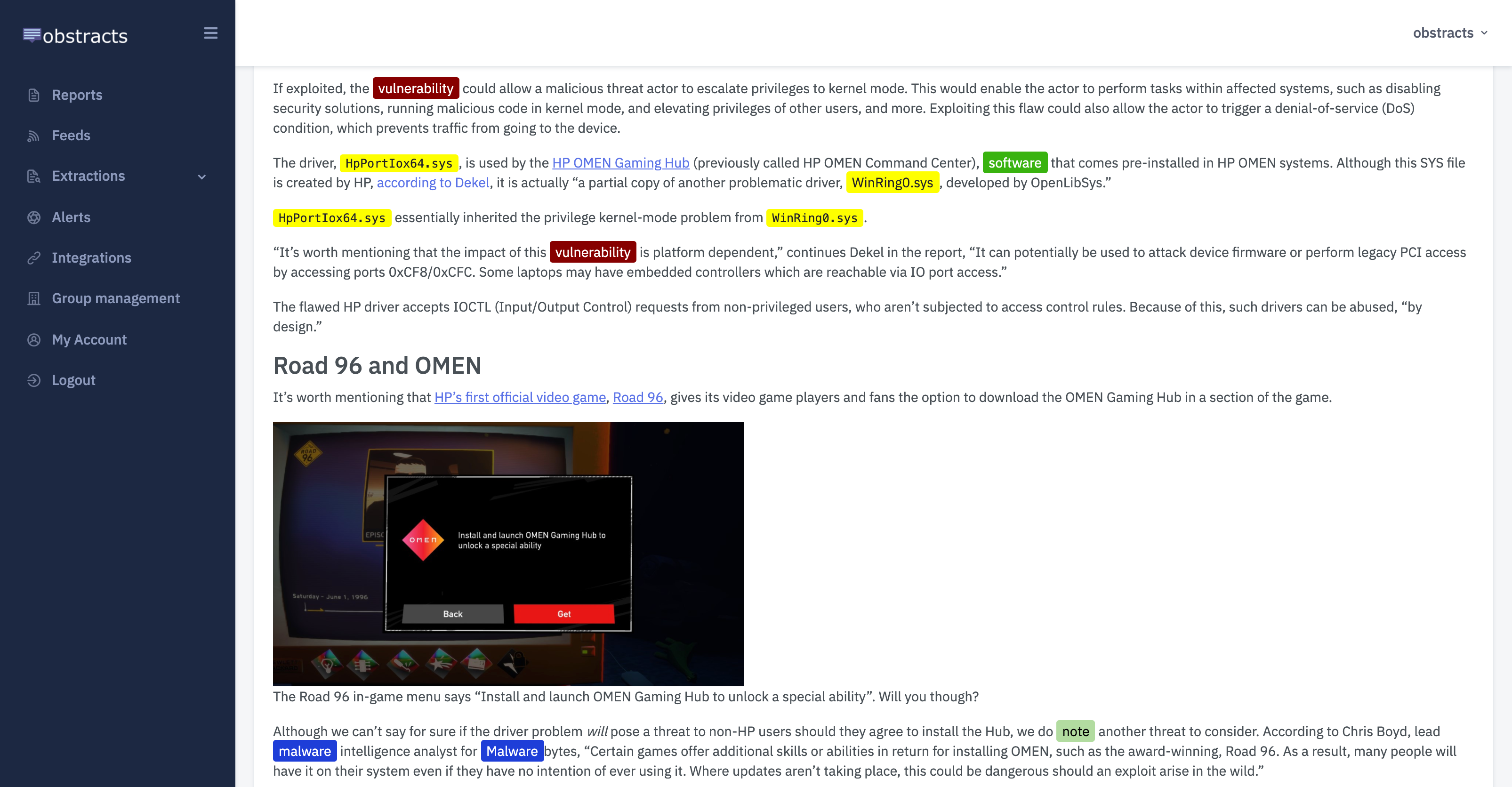
